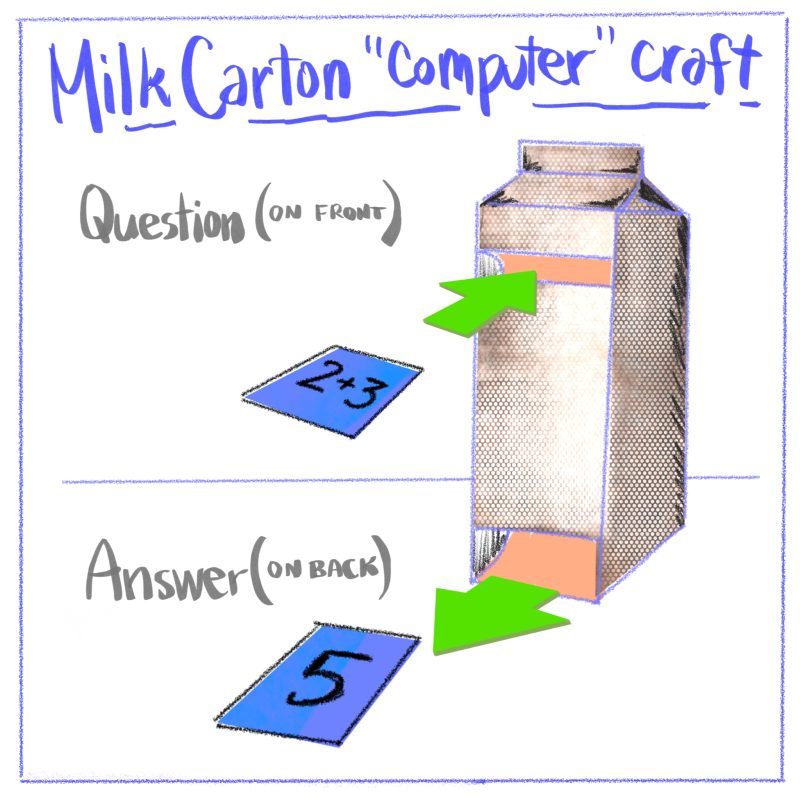

I’m pretty sure the first time I had my hands on a “computer” I made it myself. It was made from a milk carton and it was in the 1970s.

The way it worked was to write a question on one side of a card and the answer on the back. It was a fancy cardboard “flash card” viewer.

Even as a child I knew this was not really a computer. The questions and answers were predetermined. It could not answer a new and interesting question.

Years later I encountered an Apple ][ in Mrs E’s 8th grade homeroom. The computer could make graphics, do calculations, put things to read on screen, and send things to a printer. And whether it was a calculator or a clock or a game like Oregon Trail or a word processor, it was all computer code written by people. I could write `10 PRINT "HELLO"` and `20 GOTO 10` and make it do things.

But you put in INPUT and got out OUTPUT, and in theory there was a sensible relationship between those two things.

Back in October I wrote Artificial Intelligence is not that.

Lately the idea of “AI” is that it is a magic conversationalist that knows everyone and can answer any question. Yesterday, it was not operating that way. People and news sites described it thus:

- ChatGPT has meltdown

- ChatGPT starts sending alarming messages to users

- ChatGPT went berserk

- ChatGPT spat out gibberish

- ChatGPT going crazy

- ChatGPT went haywire

- ChatGPT starts spouting nonsense

- ChatGPT off the rails

- ChatGPT went full hallucination mode

- ChatGPT has gone mad today

- ChatGPT has gone berserk

The incident status page described it more dispassionately:

- Feb 20, 2024 – 1547 PST: Investigating. We are investigating reports of unexpected responses from ChatGPT.

- Feb 20, 2024 – 1547 PST: Identified. The issue has been identified and is being remediated now.

- Feb 20, 2024 – 1659 PST: Monitoring. We’re continuing to monitor the situation.

- Feb 21, 2024 – 0814 PST: Resolved. ChatGPT is operating normally.

It feels strange to read about code and algorithms as having a meltdown or going mad. That doesn’t sound like a machine. “Went berserk” reads like a mental health problem, not a computer malfunction.

ChatGPT seems more capable than that old milk carton computer, but when ChatGPT’s output reads like output from a hallucinating person, it’s clearly not better, and might be far worse.

I don’t have any final thoughts on this other than to be vaguely troubled about how we talk about these devices. I leave you with a quote from the writings of Alan Turing. The whole thing is worth a read to get the context of that famed Turing test that comes up often in discourse about advanced computation: *Mind*, Volume LIX, Issue 236, October 1950, Pages 433–460 [source]

An interesting variant on the idea of a digital computer is a ‘digital computer with a random element’. These have instructions involving the throwing of a die or some equivalent electronic process; one such instruction might for instance be, ‘Throw the die and put the resulting number into store 1000’. Sometimes such a machine is described as having free will (though I would not use this phrase myself). It is not normally possible to determine from observing a machine whether it has a random element, for a similar effect can be produced by such devices as making the choices depend on the digits of the decimal for π.

Most actual digital computers have only a finite store. There is no theoretical difficulty in the idea of a computer with an unlimited store. Of course only a finite part can have been used at any one time. Likewise only a finite amount can have been constructed, but we can imagine more and more being added as required. Such computers have special theoretical interest and will be called infinitive capacity computers.
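Turing’s remark about the random element is easy to try out. What follows is only a rough sketch in Python, not anything from his paper: one “die” is driven by a pseudo-random generator and the other by the decimal digits of π, skipping any digit that is not a face of the die. The function names (`pi_digits`, `die_from_pi`, `die_from_rng`) are made up for this illustration, and the digit generator is the well-known unbounded spigot algorithm, which I am assuming is a fair stand-in for “the digits of the decimal for π”.

```python
import random
from itertools import islice


def pi_digits():
    """Yield the decimal digits of pi (3, 1, 4, 1, 5, ...) one at a time.

    Unbounded spigot algorithm using exact integer arithmetic only.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit is settled; emit it and rescale the state.
            yield n
            q, r, t, k, n, l = (
                10 * q, 10 * (r - n * t), t, k,
                (10 * (3 * q + r)) // t - 10 * n, l,
            )
        else:
            # Not enough precision yet; fold in another term of the series.
            q, r, t, k, n, l = (
                q * k, (2 * q + r) * l, t * l, k + 1,
                (q * (7 * k + 2) + r * l) // (t * l), l + 2,
            )


def die_from_pi():
    """A fully predetermined 'die': keep only digits that are die faces."""
    for d in pi_digits():
        if 1 <= d <= 6:
            yield d


def die_from_rng(seed=None):
    """A 'die' driven by Python's pseudo-random generator (hypothetical helper)."""
    rng = random.Random(seed)
    while True:
        yield rng.randint(1, 6)


pi_throws = list(islice(die_from_pi(), 8))
rng_throws = list(islice(die_from_rng(), 8))
print("pi-driven die: ", pi_throws)
print("rng-driven die:", rng_throws)
```

Both lines of output are just lists of numbers from 1 to 6. Someone handed only those lists would have a hard time saying which machine “threw a die” and which one was reading off a constant that has not changed since long before my milk carton.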